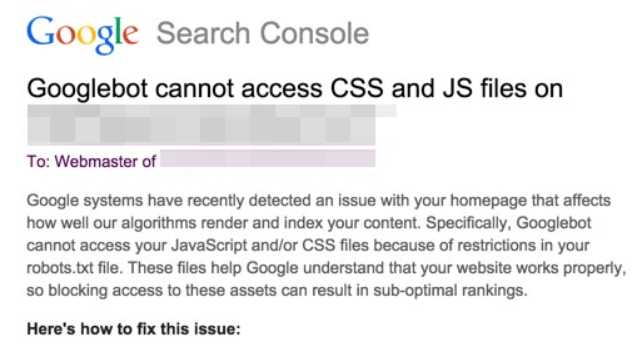

If you’ve received a warning from Google Search Console stating, “Googlebot cannot access CSS and JS files,” it’s time to take action. This error can prevent Googlebot from properly crawling and rendering your website, which might affect how your site appears in search results. Fortunately, fixing this issue is straightforward, and with the right steps you can ensure that Googlebot has access to all the important files on your website.

In this guide, we’ll walk you through why this error occurs, how to resolve it, and the steps you can take to prevent it from happening in the future. By the end of this tutorial, you’ll have a better understanding of how to manage your site’s Robots.txt file and how to make sure your CSS and JavaScript files are accessible to Googlebot.

What is the Googlebot?

Googlebot is Google’s web-crawling bot, also known as a spider. Its primary job is to crawl the internet and index web pages, making them discoverable in Google Search. When Googlebot visits your website, it reads the content, structure, and other elements so that Google can understand and rank your site effectively. This process is integral to SEO, as it determines how your site will appear in search engine results.

Why CSS and JS Files are Important

CSS (Cascading Style Sheets) and JS (JavaScript) files are crucial for the rendering and functionality of modern websites. CSS files control the visual presentation of your site, including layout, colors, fonts, and overall design. JS files, on the other hand, handle dynamic content, user interactions, and other functionality that makes your site interactive and engaging. When Googlebot accesses your site, it needs to see it as a user would. If it cannot load CSS and JS files, it may not render your site accurately. This incomplete rendering can lead to incorrect indexing and potentially lower search rankings, because Googlebot might misinterpret the user experience and functionality of your site.

Why Does Googlebot Need Access to CSS and JS Files?

Googlebot is responsible for crawling your website and indexing its content for search engine rankings. However, if Googlebot can’t access your site’s CSS and JavaScript (JS) files, it won’t be able to render your pages correctly. These files are crucial for the layout and functionality of your site, and if Googlebot can’t see them, it might misinterpret the design or content structure, which can negatively impact your SEO.

For instance, if your site is responsive, but Googlebot can’t access the CSS files that define its responsiveness, Google might not recognize that your site is mobile-friendly. Similarly, if JavaScript is used to load important content, Googlebot won’t be able to see this content if it can’t access the JS files.

How to Give Google Access to Your CSS and JS Files

Ensuring that Googlebot can access your CSS and JS files is essential for proper indexing. The most common reason Googlebot can’t access these files is due to restrictions in the Robots.txt file, which tells search engines which parts of your site they are allowed to crawl. To fix this, you need to make sure that your Robots.txt file doesn’t block access to your CSS and JS directories.

Check If Googlebot Can Access Your CSS and JS Files

Before making any changes, it’s important to determine whether Googlebot is currently blocked from accessing your CSS and JS files. Here’s how to do it:

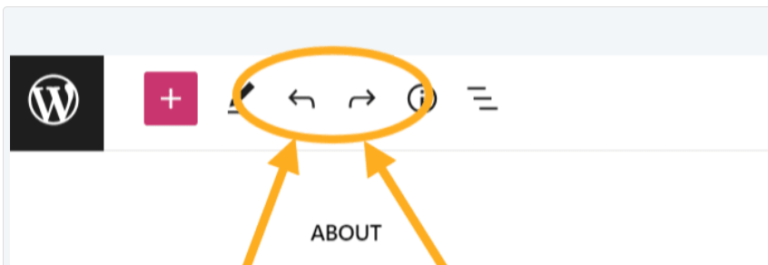

- Google Search Console:

- Go to your plugin.
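It can also help to list exactly which CSS and JS files a page loads, since those are the URLs Googlebot needs to fetch. The Python sketch below uses the standard library’s `html.parser` to collect stylesheet and script URLs from a page’s HTML; the names `AssetCollector` and `list_assets`, and the example URLs, are hypothetical. You could then compare each path against the Disallow lines in your Robots.txt file.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class AssetCollector(HTMLParser):
    """Collect stylesheet and external script URLs referenced by an HTML page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.css: list[str] = []
        self.js: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # valueless attributes such as "async" map to None
        if tag == "link":
            rel_values = (attributes.get("rel") or "").lower().split()
            href = attributes.get("href")
            if "stylesheet" in rel_values and href:
                self.css.append(urljoin(self.base_url, href))
        elif tag == "script":
            src = attributes.get("src")
            if src:  # inline scripts have no src and are skipped
                self.js.append(urljoin(self.base_url, src))


def list_assets(html: str, base_url: str) -> tuple[list[str], list[str]]:
    """Return (css_urls, js_urls) as absolute URLs resolved against base_url."""
    collector = AssetCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.css, collector.js


page = (
    '<link rel="stylesheet" href="/wp-includes/css/dist/block-library/style.min.css">'
    '<link rel="icon" href="/favicon.ico">'
    '<script async src="/wp-includes/js/jquery/jquery.min.js"></script>'
    '<script>console.log("inline");</script>'
)
css_urls, js_urls = list_assets(page, "https://example.com/")
print(css_urls)
print(js_urls)
```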

Troubleshooting: Fixing the “Googlebot Cannot Access CSS and JS Files” Error in WordPress

To ensure Googlebot can access your CSS and JS files, follow these steps:

Option 1: Manually Editing WordPress Robots.txt File

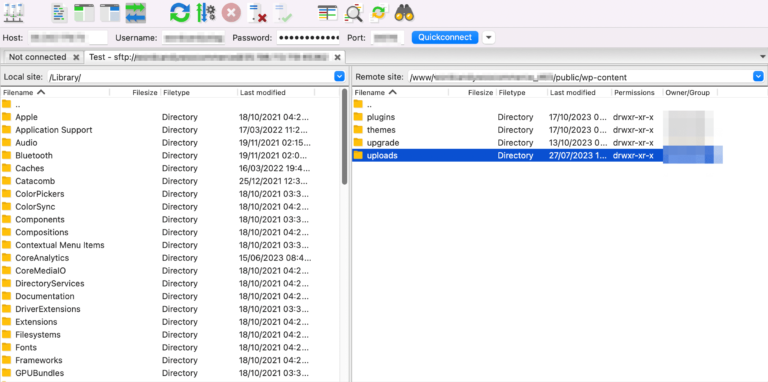

- Access the Robots.txt file: You can do this by connecting to your website via FTP or by using the File Manager in your hosting control panel.

- Edit the file: Open the Robots.txt file and look for any lines that start with “Disallow”. These lines tell search engines which directories they cannot access. If you see lines that block directories containing your CSS or JS files, remove them. For example, rules like these block stylesheets and scripts that WordPress core and plugins load:

      Disallow: /wp-includes/
      Disallow: /wp-content/plugins/

  If you prefer to keep a broader Disallow rule, add explicit Allow lines for the CSS and JS folders instead. Google applies the more specific (longer) matching rule, so these take precedence:

      Allow: /wp-includes/css/
      Allow: /wp-includes/js/

- Save the changes: Once you’ve made your edits, save the Robots.txt file and upload it back to the root directory of your website.

- Test your site: After editing the Robots.txt file, use the URL Inspection tool in Google Search Console (which replaced the older “Fetch as Google” tool) to check whether the error has been resolved.
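To see why the Allow lines in the steps above work even when a broader Disallow stays in the file, here is a minimal Python sketch of Google’s documented precedence: among the rules that match a path, the one with the longest path wins, and if an Allow and a Disallow rule are equally long, Allow wins. This is a simplified model assumed for illustration (the names `is_allowed` and `_pattern_to_regex` are hypothetical), not an official implementation. Other parsers may behave differently; Python’s built-in `urllib.robotparser`, for example, applies the first matching rule in file order.

```python
import re


def _pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern: '*' matches any run, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest matching pattern wins; Allow wins ties; unmatched paths are allowed."""
    best_length = -1
    allowed = True  # a path that no rule matches is crawlable
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value (e.g. "Disallow:") restricts nothing
        if _pattern_to_regex(pattern).match(path):  # match() anchors at the path start
            length = len(pattern)
            if length > best_length or (length == best_length and directive == "allow"):
                best_length = length
                allowed = directive == "allow"
    return allowed


rules = [
    ("disallow", "/wp-includes/"),
    ("allow", "/wp-includes/css/"),
    ("allow", "/wp-includes/js/"),
]
for path in (
    "/wp-includes/css/dist/block-library/style.min.css",
    "/wp-includes/js/jquery/jquery.min.js",
    "/wp-includes/version.php",
):
    print(path, "allowed" if is_allowed(rules, path) else "blocked")
```

Running it prints the stylesheet and script paths as allowed, while `/wp-includes/version.php` stays blocked by the broader Disallow rule.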

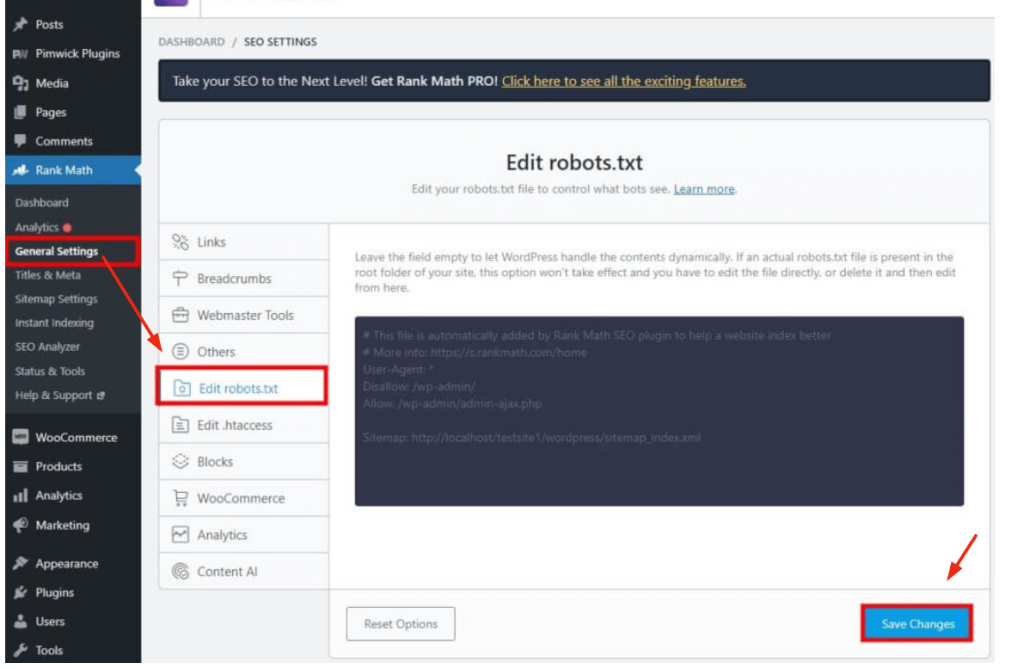

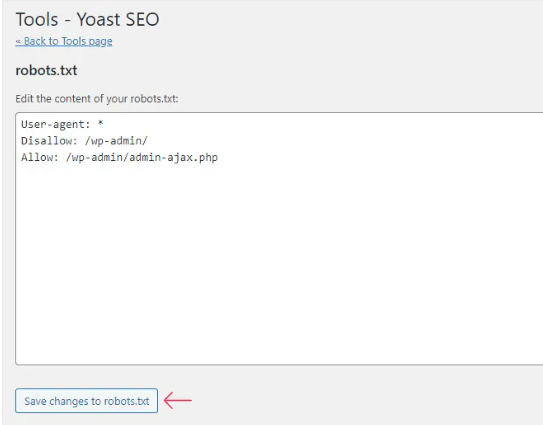

Option 2: Use a Plugin to Edit Robots.txt File

If you’re not comfortable editing files manually, you can use a plugin to manage your Robots.txt file. Here’s how:

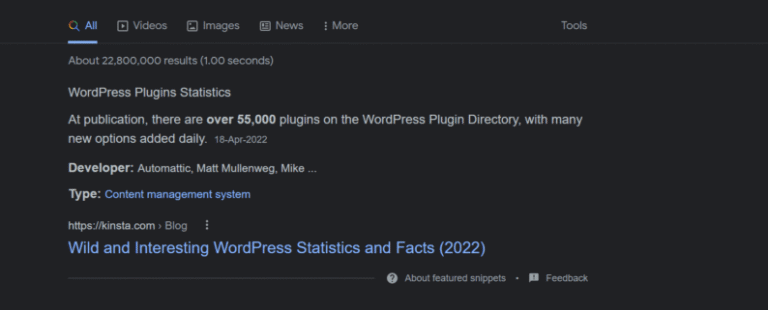

- Install a plugin: Plugins like Yoast SEO or All in One SEO offer a simple interface to edit your Robots.txt file.

- Edit the Robots.txt file: Once the plugin is installed, navigate to its settings and look for the Robots.txt editor. Make sure the rules in the editor don’t block your CSS and JS directories.

- Save and test: Save the changes and test your site using Google Search Console to ensure that Googlebot can now access your CSS and JS files.

What if I Want to Enable JavaScript in WordPress?

JavaScript plays a crucial role in modern websites, and enabling it correctly in WordPress is important for both functionality and SEO. If you want to ensure that JavaScript is fully functional on your site and accessible to Googlebot:

- Use a Properly Coded Theme: Make sure your WordPress theme is well-coded and doesn’t block JavaScript files unnecessarily.

- Check Plugin Compatibility: Ensure that your plugins are not conflicting with each other or blocking JavaScript files.

- Use Plugins Like WP Rocket: Caching plugins like WP Rocket can optimize JavaScript delivery by minifying and deferring JS files. After enabling these options, confirm that your scripts still load correctly and remain accessible to Googlebot.

Conclusion

Ensuring that Googlebot can access your CSS and JS files is vital for maintaining your site’s SEO health. By following the steps outlined in this tutorial, you can resolve the “Googlebot cannot access CSS and JS files” error and prevent it from impacting your search rankings.

Remember, keeping your Robots.txt file properly configured is just one part of a broader SEO strategy. Regularly monitoring your site with tools like Google Search Console will help you catch and fix issues before they become major problems. With these best practices in place, you can ensure that Googlebot can crawl and index your site effectively, helping you achieve better visibility in search results.

If you’re looking for fast WordPress hosting with done-for-you updates, and a solution to fix the “Googlebot cannot access CSS and JS files” error in WordPress, check out our hosting packages.
